Multilingual Conceptual Coverage in Text-to-Image Models

Michael Saxon, William Yang Wang

University of California, Santa Barbara

[abs] [pdf] [github] [demo]

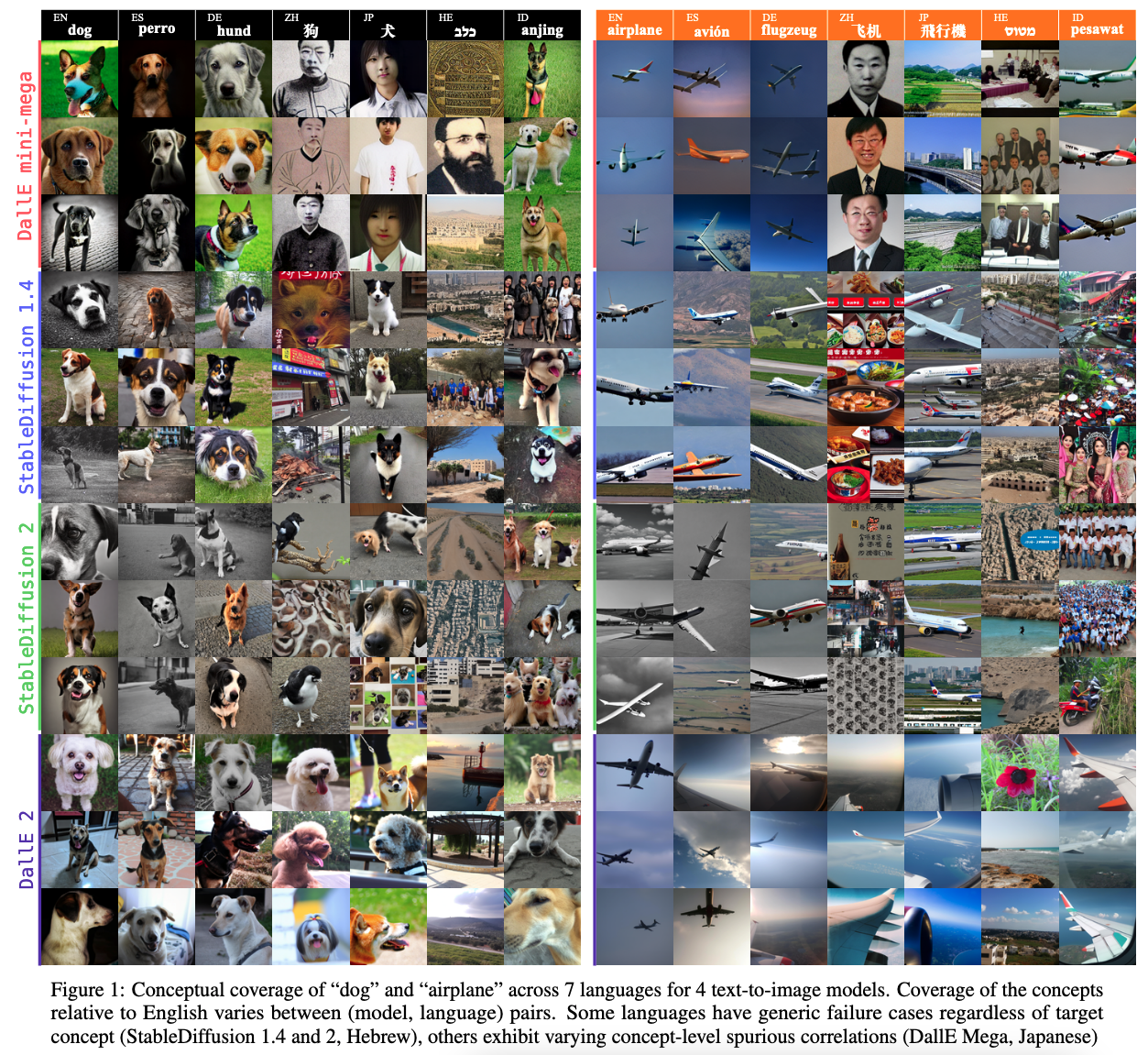

Abstract: We propose "Conceptual Coverage Across Languages" (CoCo-CroLa), a technique for benchmarking the degree to which any generative text-to-image system provides multilingual parity to its training language in terms of tangible nouns. For each model we can assess conceptual coverage of a given target language relative to a source language by comparing the population of images generated for a series of tangible nouns in the source language to the population of images generated for each noun under translation in the target language. This technique allows us to estimate how well-suited a model is to a target language as well as identify model-specific weaknesses, spurious correlations, and biases without a-priori assumptions.

[CoCo-CroLa Demo Main Page]

Correctness-sorted model outputs

The following links contain the correctness-sorted results for images generated from CoCo-CroLa v0.1 concepts in English, Spanish, German, Chinese, Japanese, Hebrew, and Indonesian, generated by the following models:

- DallE 2 results

- CogView 2 results (Trained on Chinese)

- DallE mega results

- DallE mini results

- StableDiffusion 1.1 results

- StableDiffusion 1.2 results

- StableDiffusion 1.4 results

- StableDiffusion 2 results

On each of the above pages, you can sort by language correctness by clicking anywhere on the corresponding language's column in the table.

Supplemental Experiments:

Additionally, we provide the full generated outputs for the supplementary experiments from our experiments described in Section 6 of our paper: